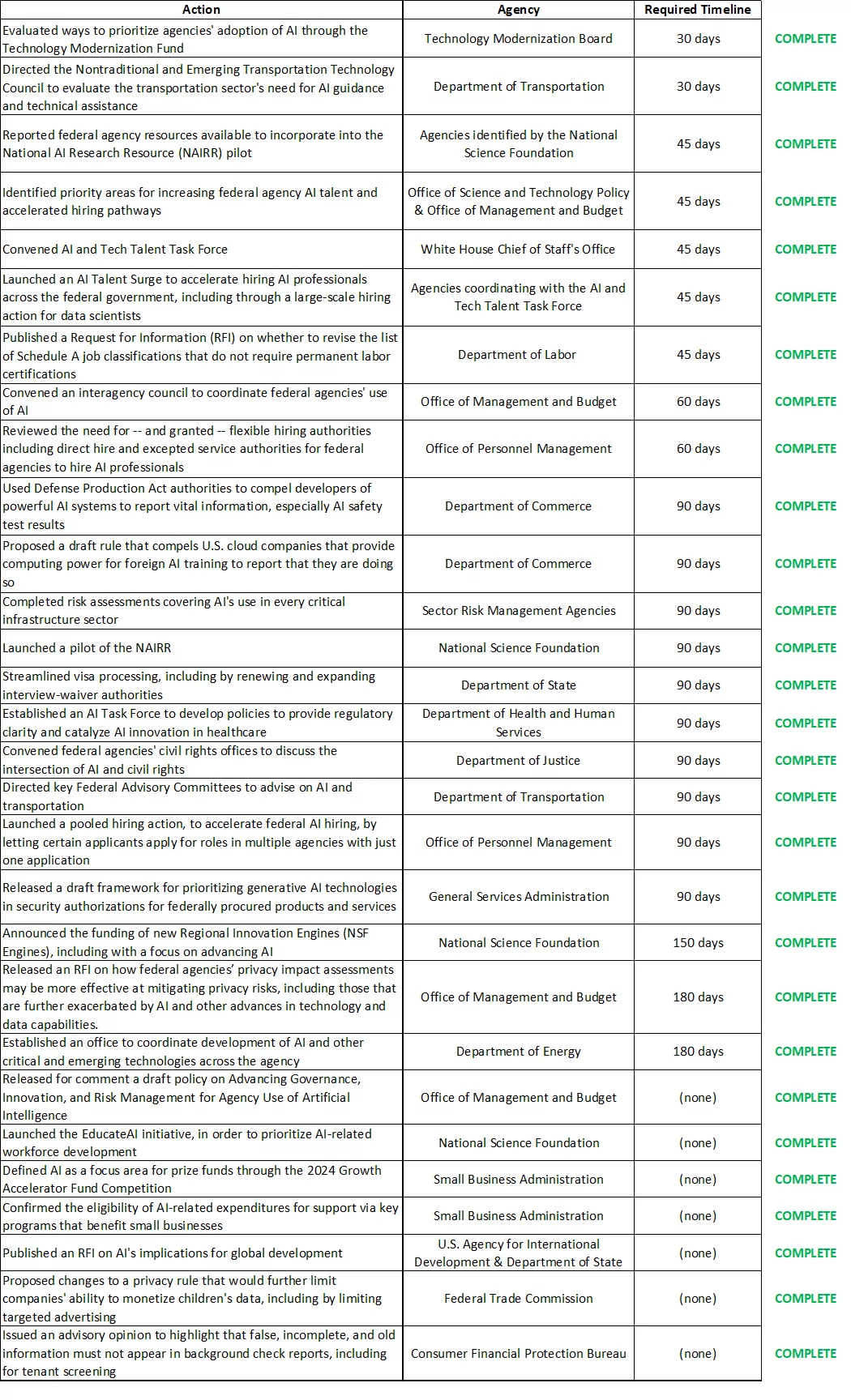

The key actions on artificial intelligence (AI) outlined in the Biden administration’s executive order have reached their 90-day deadline. The order tasked various federal departments and agencies with a series of activities to increase AI safety, security, and privacy while catalyzing and democratizing innovation, education, and equality in the industry.

With that deadline now reached, the White House has reported that every department fully complied with the 90-day actions mandated by the order.

Key takeaways:

Under the Defense Production Act, the Biden Administration has taken steps to increase the oversight of artificial intelligence systems. Developers of sophisticated AI are now required to disclose information, such as safety test results, to the Department of Commerce. In addition, a draft rule that would require U.S. cloud service providers to report when they enable foreign entities to train powerful AI models is under consideration.

To help identify potential threats, the order also mandated that several government agencies submit risk assessments regarding the use of AI in their respective domains. These assessments came from nine federal agencies, including the Departments of Defense, Transportation, Treasury, and Health and Human Services, and have been submitted to the Department of Homeland Security.

To lower the barrier to entry into AI and democratize access to the best AI resources available, the order called for the launch of a National AI Research Resource pilot. This program aims to catalyze innovation by pooling resources from public and private entities to provide researchers and academic institutions with computing power, data, software, and training infrastructure.

To address the need for more AI expertise within the federal workforce, the administration has enacted what it calls the ‘AI Talent Surge,’ an initiative that accelerates the hiring of AI professionals to meet the rising demand for technological expertise.

On the education front, the EducateAI Initiative is in place to provide funding for educators to create comprehensive and inclusive AI educational programs from K-12 through to the undergraduate level. The purpose of this initiative is to prepare the next generation of workers for the challenges and opportunities presented by AI.

Lastly, to solve problems in the healthcare industry, the Department of Health and Human Services has established an AI Task Force to navigate the regulatory landscape and stimulate AI innovation within healthcare. This Task Force has also set out to develop methods to mitigate racial biases within healthcare algorithms, ensuring that AI tools in the health sector operate equitably and justly.

Challenges and opportunities of advanced AI

Recently, generative AI systems have become much more sophisticated and widespread among both businesses and consumers. Although this brings numerous advantages to multiple industries, it also comes with several challenges. On the consumer front, we typically hear concerns about AI’s potential to disrupt labor markets and its role in the creation and spread of disinformation and misinformation. On the government front, the concerns center on safety, data privacy, and ethics.

Many of the actions mandated by the executive order are aimed at those problems. Some put barriers in place to reduce the risk of powerful, large-scale AI systems and models ending up in the wrong hands. Others promote education around AI and democratize access to it, so that a broader demographic can use the tools and resources that are typically exclusive to tech conglomerates, with the goal of spurring innovation. The last tranche of actions aims to identify and dismantle the threats AI poses to various government departments and the public at large.

This is a proactive approach by the government. It attempts to identify risks and threats while simultaneously implementing solutions designed to mitigate damage or lead to beneficial outcomes. That is quite different from the route most governing bodies take with new technology, where actions and orders typically come only after the technology at hand has done significant damage.

Overall, these efforts are meant to establish America as an AI leader and keep it there.

This update from the Biden Administration is most likely just a first checkpoint for its Executive Order on AI. As these actions and the programs they’ve created begin to hit their stride, they will likely have a ripple effect that catalyzes a shift towards more transparent and accountable AI systems, more inclusive AI solutions, and more workforce development around AI skills.

For artificial intelligence (AI) to operate within the law and thrive in the face of growing challenges, it needs to integrate an enterprise blockchain system that ensures data input quality and ownership, allowing it to keep data safe while also guaranteeing the immutability of data. Check out CoinGeek’s coverage of this emerging tech to learn more about why enterprise blockchain will be the backbone of AI.
